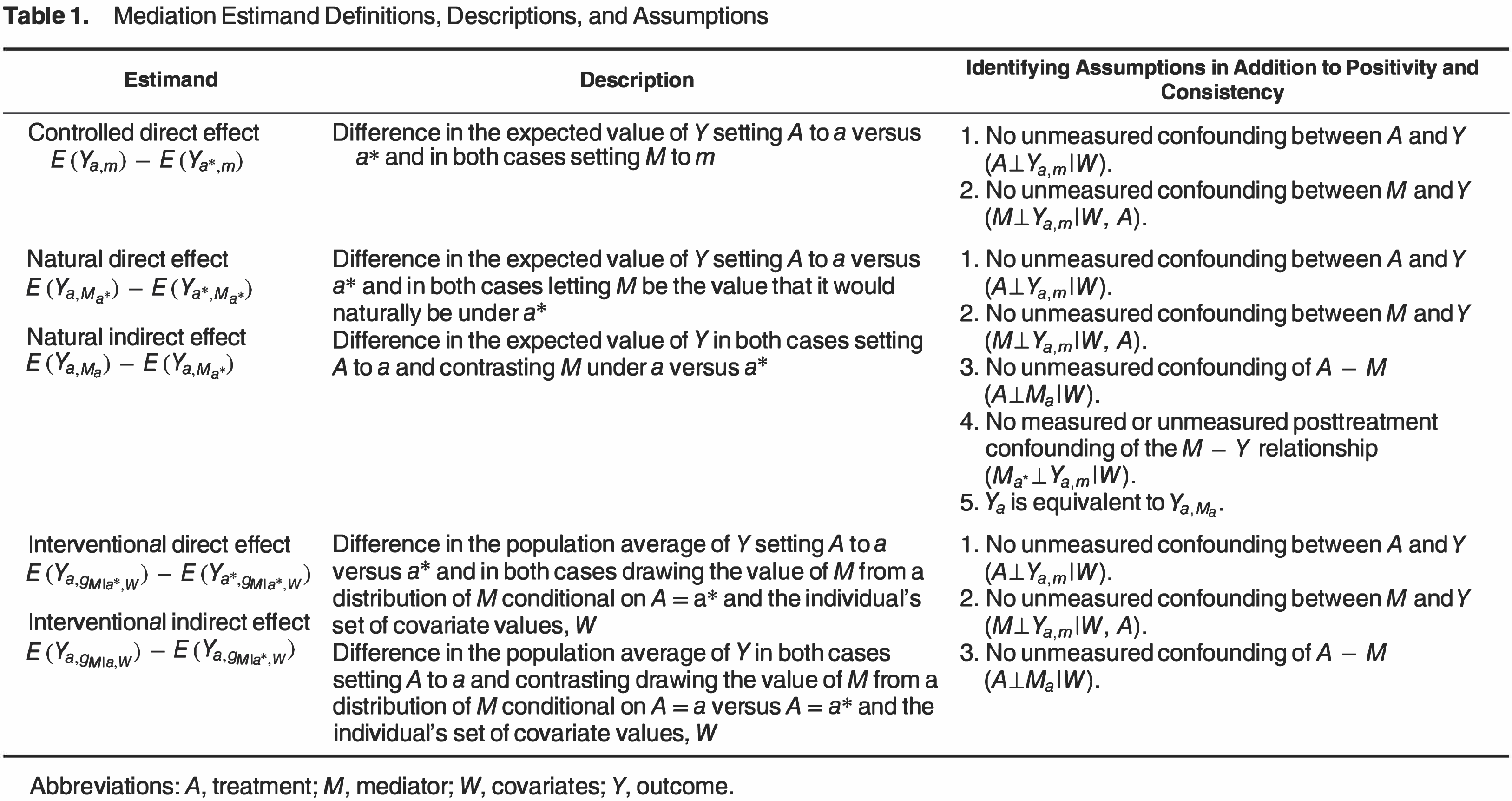

11 Types of path-specific causal mediation effects

- Controlled direct effects

- Natural direct and indirect effects

- Interventional direct and indirect effects

11.1 Controlled direct effects

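- Set the mediator to a reference value $M = m$ uniformly for everyone in the population

- Compare treatment $A = 1$ versus $A = 0$ with the mediator held fixed at $M = m$

Formally, the controlled direct effect at $m$ is $E(Y_{1,m} - Y_{0,m})$, where $Y_{a,m}$ is the counterfactual outcome under treatment $a$ and mediator value $m$.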

11.1.1 Identification assumptions:

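- Confounder assumptions: $A \perp Y_{a,m} \mid W$ and $M \perp Y_{a,m} \mid W, A = a$, where $W$ denotes the measured baseline covariates

- Positivity assumptions: $P(A = a \mid W) > 0$ and $P(M = m \mid A = a, W) > 0$ almost everywhere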

Under the above identification assumptions, the controlled direct effect can be identified:

$$E(Y_{1,m} - Y_{0,m}) = E\{E(Y \mid A = 1, M = m, W) - E(Y \mid A = 0, M = m, W)\}$$

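For intuition about this formula in R, let’s continue with a toy example and walk through the computation step by step:

- First we fit a correct model for the outcome given the mediator, the treatment, and the baseline covariates

- Assume we would like the CDE at a chosen reference value $m$ of the mediator

- Then we generate predictions from the fitted model for every observation, once with treatment set to 1 and once with treatment set to 0, holding the mediator at $m$ in both cases

- Then we compute the difference between the predicted values and average it over the sample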
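The steps above can be sketched in R as follows. This is a minimal illustration under assumptions of our own: the simulated data-generating process, the variable names (`w`, `a`, `m`, `y`), and the reference value `m_ref = 0` are hypothetical and are not taken from the original toy example. Because the simulated outcome is linear with a treatment coefficient of 1 and no treatment-mediator interaction, the true CDE is 1 at every mediator value.

```r
set.seed(2024)
n <- 1e5

# Hypothetical data-generating process (an assumption made for illustration)
w <- rnorm(n)                     # baseline covariate
a <- rbinom(n, 1, plogis(w))      # treatment, confounded by w
m <- rnorm(n, mean = w + a)       # mediator
y <- rnorm(n, mean = w + a + m)   # outcome; direct effect of a is 1

# Step 1: fit a correctly specified outcome regression
lm_y <- lm(y ~ m + a + w)

# Step 2: choose the mediator reference value for the CDE (assumed here)
m_ref <- 0

# Step 3: predict for everyone with the mediator fixed at m_ref,
# once under a = 1 and once under a = 0
pred_y1 <- predict(lm_y, newdata = data.frame(a = 1, m = m_ref, w = w))
pred_y0 <- predict(lm_y, newdata = data.frame(a = 0, m = m_ref, w = w))

# Step 4: average the difference of the predictions (g-computation)
cde_hat <- mean(pred_y1 - pred_y0)
cde_hat
```

Changing `m_ref` leaves the estimate unchanged here only because the assumed outcome model has no treatment-mediator interaction; with an interaction, the CDE would vary with the chosen mediator value.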

11.1.2 Is this the estimand I want?

- Makes the most sense if we can intervene directly on the mediator

- And if we can think of a policy that would set everyone's mediator to the same constant level

- Judea Pearl calls this prescriptive.

- Can you think of an example? (Air pollution, rescue inhaler dosage, hospital visits…)

- Does not provide a decomposition of the average treatment effect into direct and indirect effects.

What if our research question doesn’t involve intervening directly on the mediator?

What if we want to decompose the average treatment effect into its direct and indirect counterparts?

11.2 Natural direct and indirect effects

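Still using the same DAG as above:

- Recall the definition of the nested counterfactual $Y_{1, M_0}$, the outcome that would be observed if treatment were set to $A = 1$ and the mediator were set to $M_0$, the value it would take under $A = 0$

- It is interpreted as the outcome for an individual in a hypothetical world where treatment was given but the mediator was held at the value it would have taken under no treatment

- Recall that, because of the definition of counterfactuals, $Y_{1, M_1} = Y_1$ and $Y_{0, M_0} = Y_0$

- Then we can decompose the average treatment effect $E(Y_1 - Y_0)$ into a natural direct effect and a natural indirect effect: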

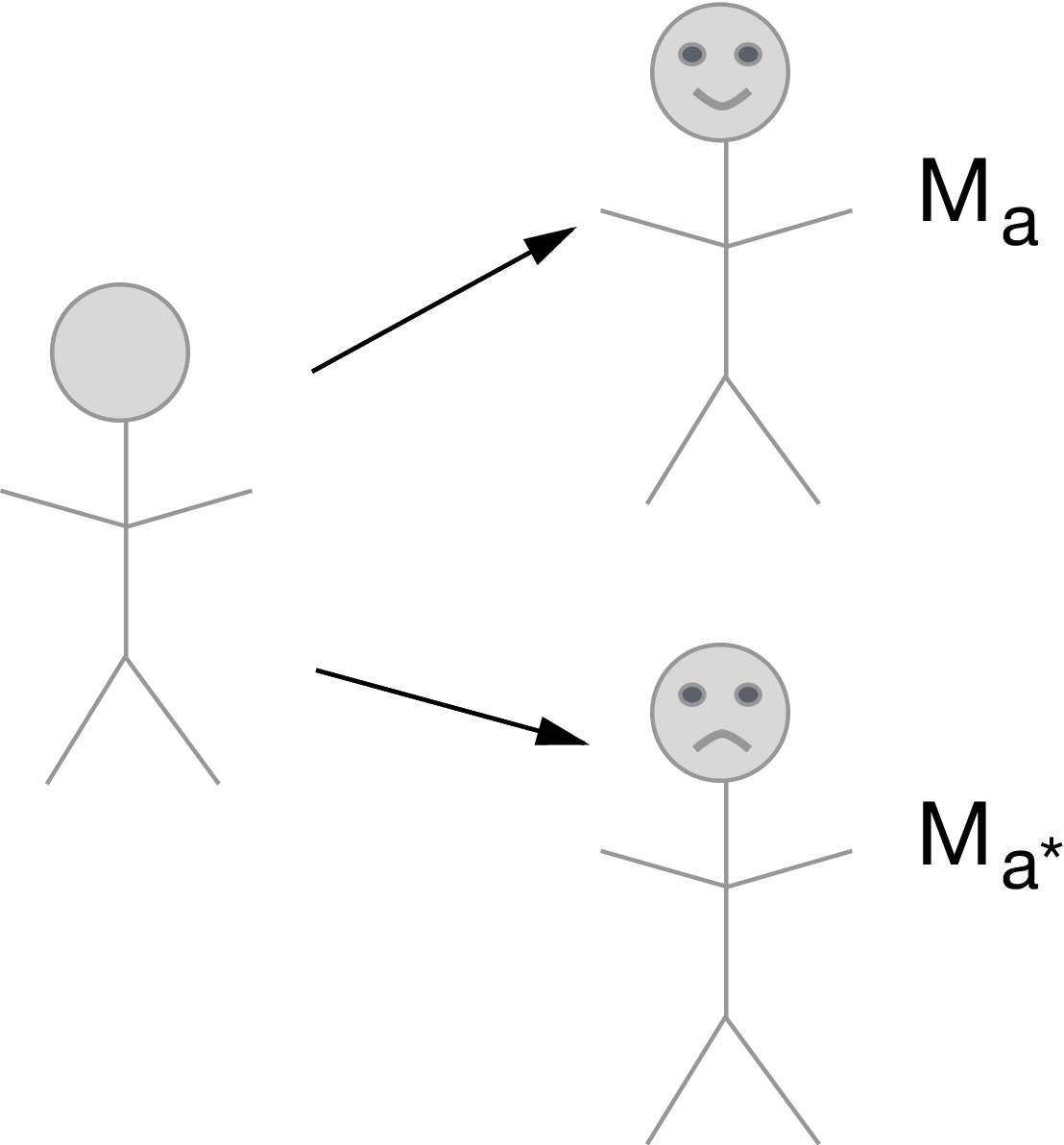

- Natural direct effect (NDE): Varying treatment while keeping the mediator fixed at the value it would have taken under no treatment

- Natural indirect effect (NIE): Varying the mediator from the value it would have taken under treatment to the value it would have taken under control, while keeping treatment fixed
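In symbols, the two definitions above correspond to adding and subtracting $E(Y_{1,M_0})$ inside the average treatment effect. The notation $Y_{a, M_{a'}}$ for the nested counterfactual (treatment set to $a$, mediator set to the value it would take under $a'$) is assumed here to match the verbal definitions:

$$
\begin{aligned}
E(Y_1 - Y_0) &= E(Y_{1,M_1} - Y_{0,M_0}) \\
&= \underbrace{E(Y_{1,M_1} - Y_{1,M_0})}_{\text{NIE}} + \underbrace{E(Y_{1,M_0} - Y_{0,M_0})}_{\text{NDE}}
\end{aligned}
$$

The first line uses $Y_{a, M_a} = Y_a$; the second simply adds and subtracts $E(Y_{1,M_0})$.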

11.2.1 Identification assumptions:

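- $A \perp Y_{a,m} \mid W$

- $M \perp Y_{a,m} \mid W, A = a$

- $A \perp M_a \mid W$

- $M_0 \perp Y_{1,m} \mid W$ (the cross-world independence assumption)

- and positivity assumptions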

11.2.2 Cross-world independence assumption

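What does $M_0 \perp Y_{1,m} \mid W$ mean?

- Conditional on $W$, knowing the value the mediator would take in the absence of treatment, $M_0$, provides no information about the outcome under treatment, $Y_{1,m}$.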

- Can you think of a data-generating mechanism that would violate this assumption?

- Example: in a randomized study, whenever we believe that treatment assignment works through adherence (i.e., almost always), we are violating this assumption (more on this later).

- Cross-world assumptions are problematic for other reasons, including:

- You can never design a randomized study where the assumption holds by design.

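If the cross-world assumption holds, we can write the NDE as a weighted average of controlled direct effects at each level of $M = m$:

$$E\left[\sum_m \{E(Y_{1,m} \mid W) - E(Y_{0,m} \mid W)\} P(M_0 = m \mid W)\right]$$

- If CDE($m$) is constant across $m$, then CDE = NDE.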

11.2.3 Identification formula:

- Under the above identification assumptions, the natural direct effect can be identified:

$$E(Y_{1,M_0} - Y_{0,M_0}) = E[E\{E(Y \mid A = 1, M, W) - E(Y \mid A = 0, M, W) \mid A = 0, W\}]$$

- The natural indirect effect can be identified similarly.

Let’s dissect this formula in R:

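- First we fit a correct model for the outcome given the mediator, the treatment, and the baseline covariates

- Then we generate predictions for every observation with treatment set to 1 and with treatment set to 0, keeping the mediator and covariates at their observed values

- Then we compute the difference between the predicted values and use this difference as a pseudo-outcome in a regression on the treatment $A$ and the covariates $W$

- Now we predict the value of this pseudo-outcome under $A = 0$ and average the predictions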
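The nested-regression steps above can be sketched in R as follows. This is an illustration under assumptions of our own: the simulated data-generating process and all object names (`lm_y`, `pseudo`, `lm_pseudo`, `nde_hat`, `nie_hat`) are hypothetical. A treatment-mediator interaction is included so that the pseudo-outcome actually varies with the mediator. Under this assumed process the true NDE and NIE are both 1.5, and the total effect is 3.

```r
set.seed(2024)
n <- 1e5

# Hypothetical data-generating process (an assumption made for illustration)
w <- rnorm(n)                                   # baseline covariate
a <- rbinom(n, 1, plogis(0.5 * w))              # treatment, confounded by w
m <- rnorm(n, mean = 1 + w + a)                 # mediator
y <- rnorm(n, mean = w + a + m + 0.5 * a * m)   # outcome with a-by-m interaction

# Step 1: correctly specified outcome regression
lm_y <- lm(y ~ a * m + w)

# Step 2: predictions under a = 1 and a = 0 at the observed mediator and covariate
pred_y1 <- predict(lm_y, newdata = data.frame(a = 1, m = m, w = w))
pred_y0 <- predict(lm_y, newdata = data.frame(a = 0, m = m, w = w))

# Step 3: the difference is a pseudo-outcome, regressed on treatment and covariate
pseudo <- pred_y1 - pred_y0
lm_pseudo <- lm(pseudo ~ a + w)

# Step 4: predict the pseudo-outcome under a = 0 and average to get the NDE
nde_hat <- mean(predict(lm_pseudo, newdata = data.frame(a = 0, w = w)))

# NIE by the analogous formula:
# E[E{Q(1, M, W) | A = 1, W}] - E[E{Q(1, M, W) | A = 0, W}]
lm_q1 <- lm(pred_y1 ~ a + w)
nie_hat <- mean(predict(lm_q1, newdata = data.frame(a = 1, w = w))) -
  mean(predict(lm_q1, newdata = data.frame(a = 0, w = w)))

c(nde = nde_hat, nie = nie_hat, total = nde_hat + nie_hat)
```

The linear pseudo-outcome regressions are correctly specified only because the assumed mediator mean is linear in `a` and `w`; in real data, these inner regressions would need the same care as the outcome model.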

11.2.4 Is this the estimand I want?

- Makes sense to intervene on $A$ but not directly on $M$.

- Want to understand a natural mechanism underlying an association / total effect. J. Pearl calls this descriptive.

- NDE + NIE = total effect (ATE).

- Okay with the assumptions.

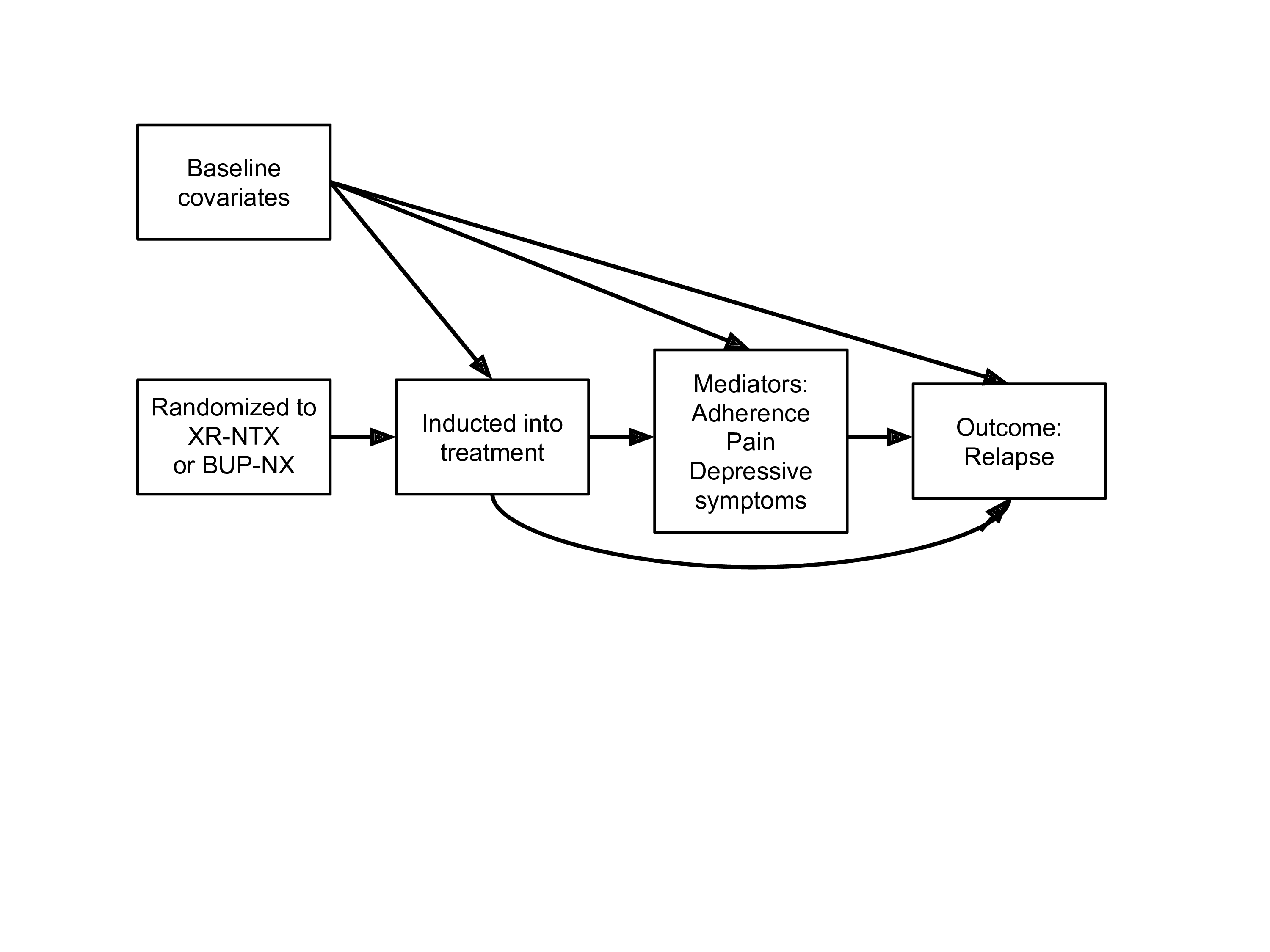

What if our data structure involves a post-treatment confounder of the mediator-outcome relationship (e.g., adherence)?

11.2.5 Unidentifiability of the NDE and NIE in this setting

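In this example, natural direct and indirect effects are not generally point identified from observed data.

The reason for this is that the cross-world counterfactual assumption $Y_{1,m} \perp M_0 \mid W$ does not hold in the above directed acyclic graph.

To give intuition, we focus on the counterfactual outcome $Y_{A=1, M_{A=0}}$, writing $Z$ for the post-treatment (treatment-induced) confounder.

- This counterfactual outcome involves two counterfactual worlds simultaneously: one in which $A = 1$ for the first portion of the counterfactual outcome, and one in which $A = 0$ for the nested portion.

- Setting $A = 1$ induces a counterfactual treatment-induced confounder, $Z_{A=1}$. Setting $A = 0$ induces another, $Z_{A=0}$.

- The two treatment-induced counterfactual confounders, $Z_{A=1}$ and $Z_{A=0}$, share unmeasured common causes, which creates a spurious association between them.

- Because $Z_{A=1}$ is causally related to $Y_{A=1, M=m}$ and $Z_{A=0}$ is causally related to $M_{A=0}$, this shared path means the backdoor criterion is not met for identification of $Y_{A=1, M_{A=0}}$; that is, $M_{A=0} \not\perp Y_{A=1, m} \mid W$.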

However:

- We can actually identify the NIE/NDE in the above setting if we are willing to invoke monotonicity between a treatment and one or more binary treatment-induced confounders (1).

- Assuming monotonicity is also sometimes referred to as assuming “no defiers” – in other words, assuming that there are no individuals who would do the opposite of the encouragement.

- Monotonicity may seem like a restrictive assumption, but it may be reasonable in some common scenarios. For example, in trials where the intervention is randomized treatment assignment and the treatment-induced confounder is whether or not treatment was actually taken, we may feel comfortable assuming that there are no “defiers”, as is frequently assumed when using instrumental variables (IVs) to identify causal effects.

Note: CDEs are still identified in this setting. They can be identified and estimated similarly to a longitudinal data structure with a two-time-point intervention.

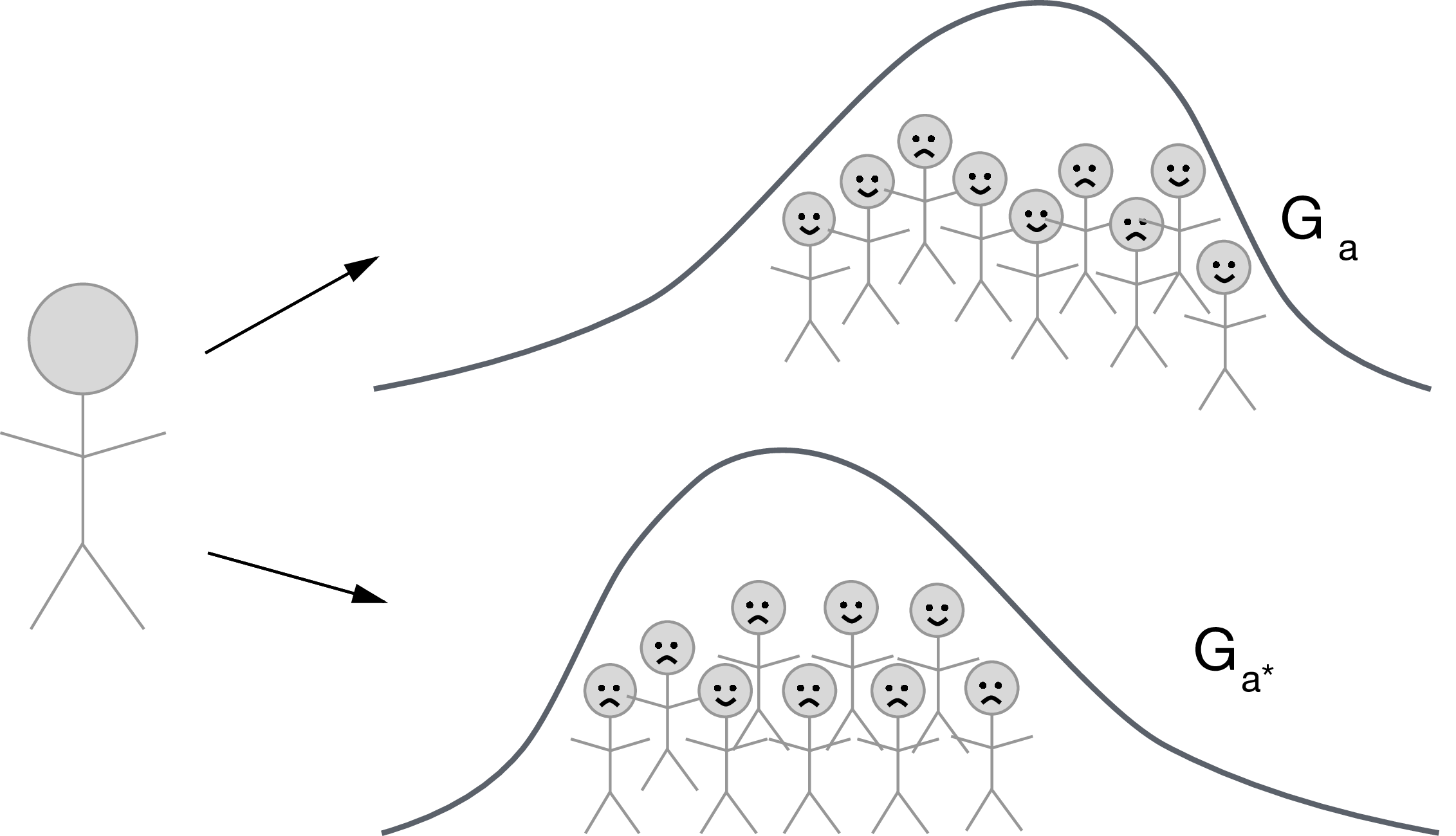

11.3 Interventional (in)direct effects

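- Let $G_a$ denote a random draw from the distribution of $M_a \mid W$

- Define the counterfactual $Y_{1,G_0}$ as the counterfactual outcome in a hypothetical world where $A$ is set to $A = 1$ and $M$ is set to $M = G_0$ with probability one

Define $Y_{0,G_0}$ and $Y_{1,G_1}$ similarly.

Then we can define:

$$E[Y_{1,G_1} - Y_{0,G_0}] = \underbrace{E[Y_{1,G_1} - Y_{1,G_0}]}_{\text{interventional indirect effect}} + \underbrace{E[Y_{1,G_0} - Y_{0,G_0}]}_{\text{interventional direct effect}}$$

- Note that $E[Y_{1,G_1} - Y_{0,G_0}]$ is still a total effect of treatment, even if it is different from the ATE $E[Y_1 - Y_0]$

- Above we defined $G_a$ as a random draw from the distribution of $M_a \mid W$

- What if instead we define $G_a$ as a random draw from the distribution of $M_a \mid (Z_a, W)$, where $Z$ is the post-treatment confounder?

- It turns out the indirect effect defined in this way only measures the path $A \rightarrow M \rightarrow Y$, and not the path $A \rightarrow Z \rightarrow M \rightarrow Y$

- There may be important reasons to choose one over another (e.g., survival analyses where we want the distribution conditional on $Z$)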

11.3.1 Identification assumptions:

- $A \perp Y_{a,m} \mid W$

- $M \perp Y_{a,m} \mid W, A = a, Z$

- $A \perp M_a \mid W$

- and positivity assumptions.

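Under these assumptions, the population interventional direct and indirect effects are identified through

$$E(Y_{a,G_{a'}}) = E\left[E\left\{\sum_z E(Y \mid A = a, Z = z, M, W) P(Z = z \mid A = a, W) \,\Big|\, A = a', W\right\}\right]$$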

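Let’s dissect this formula in R. Let us compute $E(Y_{1,G_0})$, so that $a = 1$ and $a' = 0$.

- First, fit a regression model for the outcome, and compute $\bar{Q}_Y(a, z, M, W) = E(Y \mid A = a, Z = z, M, W)$ at $a = 1$ for every value $z$

- Now we fit the true model for $Z \mid A, W$ and compute $P(Z = z \mid A = 1, W)$

- Now we compute the following pseudo-outcome: $\sum_z \bar{Q}_Y(1, z, M, W) P(Z = z \mid A = 1, W)$

- Now we regress this pseudo-outcome on $A$ and $W$, and compute the predictions setting $A = 0$

- And finally, just average those predictions!

This was for $(a, a') = (1, 0)$. We can do the same with $(a, a') = (1, 1)$ and $(a, a') = (0, 0)$ to obtain an effect decomposition.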
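The computation just described can be sketched in R as follows. This is an illustration under assumptions of our own: the simulated data-generating process (with a binary post-treatment confounder `z`), the helper `interventional_mean()`, and all object names are hypothetical. Under this assumed process, $E(Y_{1,G_0}) = 2$, $E(Y_{1,G_1}) = 3.4$, and $E(Y_{0,G_0}) = 0.6$, so the interventional indirect and direct effects are both 1.4.

```r
set.seed(2024)
n <- 1e5

# Hypothetical data-generating process (an assumption made for illustration)
w <- rnorm(n)                         # baseline covariate
a <- rbinom(n, 1, 0.5)                # randomized treatment
z <- rbinom(n, 1, 0.3 + 0.4 * a)      # binary post-treatment confounder
m <- rnorm(n, mean = w + a + z)       # mediator
y <- rnorm(n, mean = w + a + z + m)   # outcome

# Outcome regression and model for the post-treatment confounder
lm_y  <- lm(y ~ m + a + z + w)
glm_z <- glm(z ~ a + w, family = binomial())

# Hypothetical helper: estimates E(Y_{a_val, G_{a_prime}})
interventional_mean <- function(a_val, a_prime) {
  # Outcome predictions at A = a_val for each value of z
  q_z0 <- predict(lm_y, newdata = data.frame(m = m, a = a_val, z = 0, w = w))
  q_z1 <- predict(lm_y, newdata = data.frame(m = m, a = a_val, z = 1, w = w))
  # P(Z = 1 | A = a_val, W)
  p_z1 <- predict(glm_z, newdata = data.frame(a = a_val, w = w),
                  type = "response")
  # Pseudo-outcome: average the outcome predictions over Z
  pseudo <- q_z0 * (1 - p_z1) + q_z1 * p_z1
  # Regress the pseudo-outcome on A and W, predict at A = a_prime, and average
  fit_pseudo <- lm(pseudo ~ a + w)
  mean(predict(fit_pseudo, newdata = data.frame(a = a_prime, w = w)))
}

theta_10 <- interventional_mean(1, 0)
theta_11 <- interventional_mean(1, 1)
theta_00 <- interventional_mean(0, 0)

c(indirect = theta_11 - theta_10, direct = theta_10 - theta_00)
```

Wrapping the steps in a function is only a convenience for repeating them at the three pairs $(a, a')$; the linear pseudo-outcome regression is adequate here because the assumed mediator mean is linear in `a` and `w`.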

11.3.2 Is this the estimand I want?

- Makes sense to intervene on $A$ but not directly on $M$.

- Goal is to understand a descriptive type of mediation.

- Okay with the assumptions!

11.3.3 But, there is an important limitation of interventional effects

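(2) recently uncovered an important limitation of these effects, which can be described as follows. The sharp mediational null hypothesis can be defined as

$$H_0: Y_{a, M_{a'}} = Y_{a, M_{a^\star}} \quad \text{for all } a, a', a^\star,$$

that is, the hypothesis that no individual in the population has an effect operating through the mediator.

The problem is that interventional effects are not guaranteed to be null when the sharp mediational null hypothesis is true.

This could present a problem in practice if some subgroup of the population has a relationship between $A$ and $M$ but not between $M$ and $Y$, while another, distinct subgroup has a relationship between $M$ and $Y$ but not between $A$ and $M$.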

More details in the original paper.
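To make this limitation concrete, here is a small simulation of our own (it is not taken from the cited paper, and every name in it is hypothetical). An unobserved subgroup indicator `s` splits the population into people for whom treatment moves the mediator but the mediator does not affect the outcome, and people for whom the mediator affects the outcome but treatment does not move the mediator. Because the counterfactuals are simulated directly, the individual-level indirect effect can be checked exactly, and the random draws $G_a$ are generated by permuting the counterfactual mediators (there is no $W$ in this sketch, so the draw is from the marginal distribution).

```r
set.seed(2024)
n <- 1e5

# Hypothetical population with an unobserved subgroup indicator s
s <- rbinom(n, 1, 0.5)
e <- rnorm(n)                 # outcome noise shared across counterfactuals

# Counterfactual mediators: treatment moves M only in subgroup s = 1
m1 <- s
m0 <- rep(0, n)

# Counterfactual outcome at mediator value mval: M matters only in subgroup s = 0
y_am <- function(mval) mval * (1 - s) + e

# Individual-level indirect effect Y_{a, M_1} - Y_{a, M_0}: zero for everyone,
# so the sharp mediational null holds
individual_ie <- y_am(m1) - y_am(m0)

# Interventional version: replace M_a by a random draw G_a from its distribution
g1 <- sample(m1)
g0 <- sample(m0)
iie <- mean(y_am(g1) - y_am(g0))

c(max_individual_effect = max(abs(individual_ie)), interventional_indirect = iie)
```

In this assumed population no individual has a mediated effect, yet the interventional indirect effect is close to 0.25, because the random draw breaks the link between a person's mediator value and that person's subgroup.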

11.4 Estimand Summary